Ethical dilemma of self-driving cars

Self-driving technology is slowly making its way into the automotive industry, with self-driving cars from Tesla and Cadillac already cruising the streets. According to WHO figures for 2016, about 1.35 million people die in road accidents every year, and about 90% of road accidents are attributed to human error. Self-driving technology seems like the perfect solution to this problem because it can minimize human error. It may also help ease traffic and improve fuel efficiency. But this technology comes with its own set of challenges.

Let us consider a scenario in which we are travelling in a self-driving car on a highway when, all of a sudden, a large object falls off the truck in front of us. We are boxed in on all sides and a collision seems unavoidable. Now the car has to make a decision: swerve right and hit the SUV, swerve left and hit the motorcyclist, or stay in lane and let the heavy object hit us. If we let the object hit us, we sacrifice ourselves and cause others no harm. If we swerve right, we minimize the danger to human life, since SUVs have a high passenger safety rating. If we swerve left, we severely injure the motorcyclist. When a human driver encounters this scenario, they are given some leniency, because any decision taken in the moment would be a panicked response with no malicious intent. Machines, on the other hand, are not given the same leniency, because their behaviour is decided by programmers well in advance, which might come across as premeditated homicide.

Now suppose that, instead of the SUV, there is a motorcyclist wearing a helmet on the right, while the motorcyclist on the left is not wearing one. If we go with the principle of minimizing harm, the car should swerve right and hit the motorcyclist wearing the helmet, who is more likely to survive. But this penalises them for obeying traffic rules. If we instead swerve left, we cause greater injuries to the motorcyclist without a helmet, which goes against our principle of minimizing harm. Such decision making by the machine might also sometimes compromise our own safety in the name of minimizing harm.
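The harm-minimizing rule in the helmet scenario can be sketched in a few lines of Python. This is only an illustration: the `Option` class, the `least_harm` function, and the harm scores are hypothetical values chosen to mirror the reasoning above, not measured data or any manufacturer's logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    expected_harm: float  # hypothetical score: 0.0 (no harm) to 1.0 (fatal)


def least_harm(options):
    """Return the option with the lowest expected harm score."""
    return min(options, key=lambda option: option.expected_harm)


# Assumed scores for the helmet scenario; the numbers are made up.
options = [
    Option("stay in lane (object hits our car)", 0.7),
    Option("swerve right (motorcyclist with helmet)", 0.5),
    Option("swerve left (motorcyclist without helmet)", 0.9),
]

choice = least_harm(options)
print(choice.name)
```

With these assumed scores the rule picks the helmeted motorcyclist, which is exactly the outcome that feels unfair: a pure harm score has no notion of who followed the rules.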

People think ethically in principle, but in practice they behave more selfishly. Given a choice between a car that always saves as many lives as it can and a car that saves its passengers at any cost, many people prefer the latter. Ironically, the same people expect others to buy cars of the first type. By making the individually rational choice of prioritizing their own safety, they may collectively be diminishing the common good, i.e., minimizing total harm.
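A toy model can make this tension concrete. In the sketch below, `personal_risk` and all of its constants are hypothetical: we simply assume that a self-protective car lowers its owner's risk a little, while every self-protective car on the road raises the risk for everyone else.

```python
def personal_risk(own_car_selfish: bool, share_selfish: float) -> float:
    """Hypothetical risk score for one road user (lower is better).

    share_selfish is the fraction of other cars that protect their own
    passengers at any cost. All constants are made up for illustration.
    """
    baseline = 1.0
    danger_from_others = 2.0 * share_selfish
    self_protection = 0.5 if own_car_selfish else 0.0
    return baseline + danger_from_others - self_protection


# Whatever the others choose, the self-protective car is safer for its owner.
for share in (0.0, 0.5, 1.0):
    print(share, personal_risk(True, share), personal_risk(False, share))

# Yet if everyone makes that choice, everyone ends up worse off.
everyone_selfish = personal_risk(True, 1.0)
everyone_utilitarian = personal_risk(False, 0.0)
print(everyone_selfish, everyone_utilitarian)
```

Under these assumptions the self-protective car is always the better personal choice, yet a road full of them is riskier for each driver than a road full of harm-minimizing cars.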

I think an evolutionary approach to training these models is the best way forward for solving these problems. It is similar to how we raise children. We can tell them a stove is hot, but we cannot protect them from everything hot. That is learned through self-exploration and self-correction. The same approach can be used for machines. This training through reinforcement (reinforcement learning) can be monitored through a checkpoint system. We feed the machine several scenarios and give appropriate feedback on its solutions, allowing it ‘to learn’ and correct its ‘mistakes’. We can also collect data from car manufacturers on car crashes and on the avoidable mistakes that drivers commonly make.

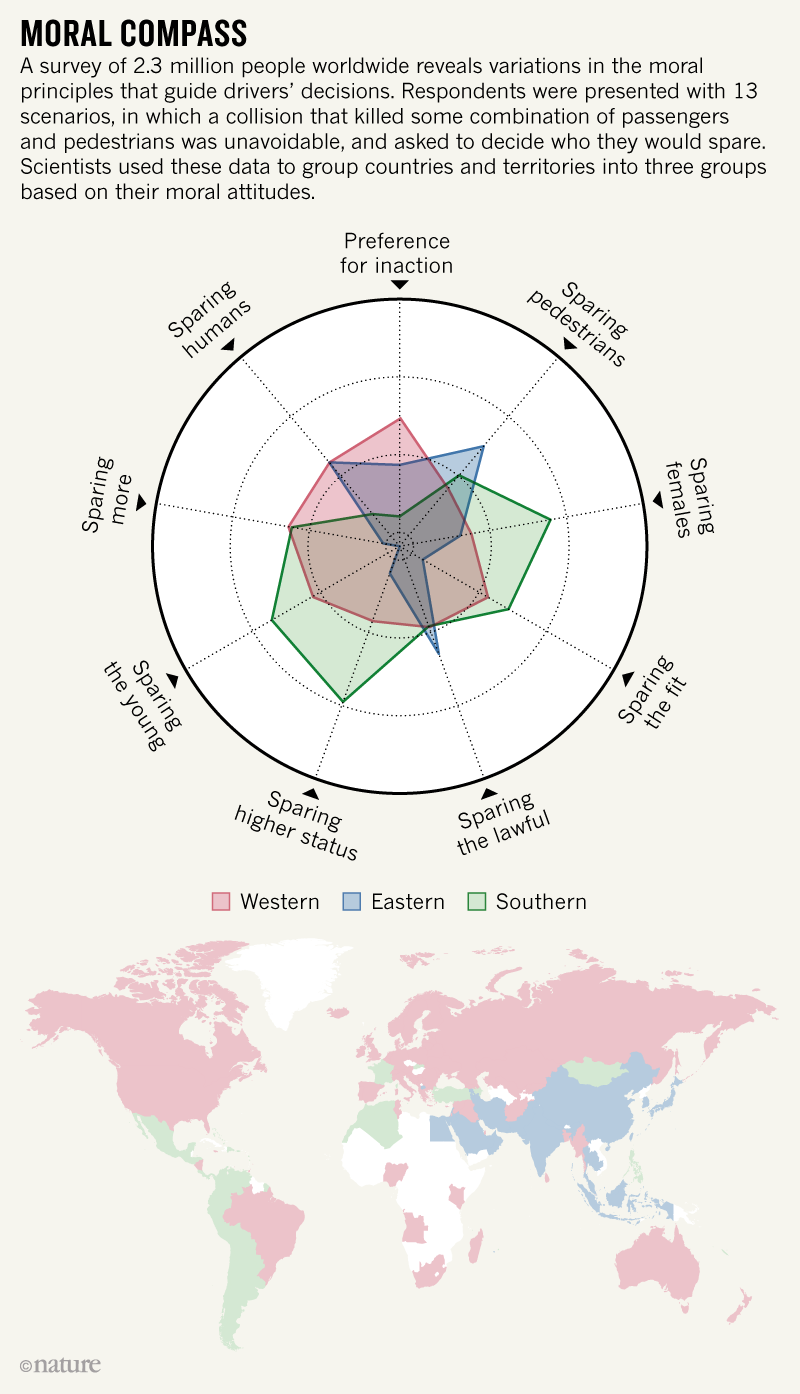
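The feedback loop described above can be sketched as a very small reinforcement learning example. Everything here is an assumption made for illustration: the scenarios, the actions, the +1/-1 feedback values in `FEEDBACK`, and the `train` and `greedy_policy` functions are hypothetical, and the update is a one-step (bandit-style) value estimate, far simpler than what a real driving system would need.

```python
import random

# Hypothetical scenarios with reviewer feedback per action (+1 good, -1 bad).
FEEDBACK = {
    "pedestrian ahead": {"brake": 1.0, "swerve": -1.0, "keep speed": -1.0},
    "obstacle, next lane free": {"brake": -1.0, "swerve": 1.0, "keep speed": -1.0},
    "clear road": {"brake": -1.0, "swerve": -1.0, "keep speed": 1.0},
}


def greedy_policy(q):
    """For each scenario, pick the action with the highest learned value."""
    return {scenario: max(values, key=values.get) for scenario, values in q.items()}


def train(episodes=200, alpha=0.5, epsilon=0.2, checkpoint_every=50, seed=0):
    rng = random.Random(seed)
    # Start with no preference: every action has value 0.
    q = {s: {a: 0.0 for a in actions} for s, actions in FEEDBACK.items()}
    checkpoints = []
    for episode in range(1, episodes + 1):
        for scenario, actions in FEEDBACK.items():
            if rng.random() < epsilon:
                action = rng.choice(list(actions))  # explore
            else:
                action = max(q[scenario], key=q[scenario].get)  # exploit
            reward = actions[action]  # feedback on the chosen action
            # Move the estimate a step toward the feedback received.
            q[scenario][action] += alpha * (reward - q[scenario][action])
        if episode % checkpoint_every == 0:
            # Checkpoint: record the current policy so it can be reviewed.
            checkpoints.append((episode, greedy_policy(q)))
    return q, checkpoints


q, checkpoints = train()
policy = greedy_policy(q)
print(policy)
```

Each checkpoint stores the policy the machine would follow at that point in training, so a reviewer can inspect how its choices change before it is trusted with more scenarios.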

There are several other challenges. Moral choices are not universal; they differ from country to country. This might lead to bias based on gender, race, age, occupation, the economic prosperity of the country, and so on. In cases of unavoidable death, people in countries with high economic inequality, such as Colombia, prefer to sacrifice the homeless rather than executives, while people in countries with strong government institutions would rather ‘punish’ those who do not follow traffic rules. Here in India, killing animals such as cows is unacceptable, no matter the scenario. We have a long way to go in solving the ethical dilemmas that come with self-driving technology. Almost every solution has its drawbacks and trade-offs. We can only attempt to reduce the number of problems, and this can be done only through research and asking the right questions.

References:

- TED talk on the ethical dilemma of self-driving cars

- https://www.theglobeandmail.com/globe-drive/culture/technology/the-ethical-dilemmas-of-self-drivingcars/article37803470/

- https://www.theverge.com/2018/10/24/18013392/self-driving-car-ethics-dilemma-mit-study-moral-machine-results